Preparing data for Large Language Models (LLMs) is of utmost importance, because these models depend heavily on data vectorization, which produces embeddings. To work with such a model you need a vector space: imagine placing all the vectors on one football field and then understanding how they relate to each other. Think of this as unpacking your brain onto a football field while keeping it connected on multiple layers.

Many embedding methods are known, and today's best models also use tensor-based modeling to build high-dimensional embeddings for text. The reason is that a single word, like WHY, does not give a model enough to predict efficiently what should follow; the model needs the context around how the word is used and what it means.

ChatGPT is built on a GPT transformer that learns its own token embeddings internally, while dedicated embedding models such as text-embedding-ada-002 expose embeddings of whole texts through an API. Transformer architectures like GPT and BERT generate contextual word and sentence embeddings. These embeddings capture semantic relationships, meaning similar words or phrases have closer embeddings in the vector space.

You may want to use your GPT model to learn more about Word2Vec, GloVe, BERT, DeepWalk, and Node2Vec, which provide different kinds of embeddings, and about PCA (Principal Component Analysis), which is commonly used to reduce the number of embedding dimensions. Some of these are very primitive yet effective for understanding. Also, look at NVIDIA Morpheus cybersecurity solutions if you are thinking about the next level of sequencing (grouping) and labeling (classification of normal and abnormal).

At one point OpenAI offered the text-embedding-ada-002 text embedding model, which vectorizes whole sentences, paragraphs, or even entire documents rather than individual words. The model captures the semantic meaning of the input as a single 1536-dimensional vector, rather than assigning separate vectors to each word.

Key Features:

- High-dimensional embeddings (1536-dimensional vectors), which leave room to encode fine-grained semantic distinctions between texts.

- Efficient and cost-effective compared to previous models. Attention and feed-forward layers both require tensor computations inside the model, which cost power and time, so efficiency matters.

- Supports large-scale retrieval tasks, since comparing fixed-size vectors is a fast way to find the passages needed to answer or summarize a question.

- Can process a variety of text formats (short phrases, documents, code, etc.)

How it Works:

- If you input a single word, the model will produce an embedding capturing its meaning in context.

- If you input a sentence or paragraph, the model will encode the entire text as a single vector that represents its overall semantic content.

- Longer inputs (e.g., documents) must be truncated or split into smaller chunks to fit the token limit (about 8,191 tokens for text-embedding-ada-002 as of the latest update).
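The chunking of long inputs can be sketched as follows. This is a minimal illustration, not how any particular API does it: the helper name `chunk_tokens` is hypothetical, and whitespace-separated words stand in for real model tokens, which a production pipeline would count with the model's own tokenizer.

```python
def chunk_tokens(tokens, max_tokens):
    """Split a token list into consecutive chunks of at most max_tokens items."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    return [tokens[i:i + max_tokens] for i in range(0, len(tokens), max_tokens)]


# Assumption: words approximate tokens; real tokenizers split text into subwords.
document = "one two three four five six seven eight nine ten"
tokens = document.split()

chunks = chunk_tokens(tokens, max_tokens=4)
for chunk in chunks:
    print(len(chunk), " ".join(chunk))
```

Each chunk would then be embedded on its own, giving one vector per chunk instead of one vector for the whole document.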

Transformer and relationship to tensor

A Transformer itself is not a tensor, but it processes tensors as part of its architecture. Loosely, it can be viewed as a transformation from a set of input tensors to a set of output tensors, built from multilinear operations such as matrix multiplication combined with non-linear functions. (Important: several inputs are combined into one output.) Let’s break it down:


- A Transformer is a deep learning model architecture designed for NLP tasks. It consists of layers that manipulate numerical data (tensors).

- A tensor is a multi-dimensional numerical array (like a matrix) used to represent data in neural networks.
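To make the idea of a tensor as a multi-dimensional array concrete, here is a small sketch. It assumes plain nested Python lists as a stand-in for a tensor library, and the `shape` helper is hypothetical and only handles non-empty, regularly nested lists.

```python
def shape(tensor):
    """Return the shape of a non-empty, regularly nested list as a tuple."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]
    return tuple(dims)


scalar = 0.5                                 # rank 0: a single number
vector = [0.1, 0.2, 0.3]                     # rank 1: one embedding
matrix = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]  # rank 2: tokens x dimensions
batch = [matrix, matrix]                     # rank 3: batch x tokens x dimensions

for name, value in [("scalar", scalar), ("vector", vector),
                    ("matrix", matrix), ("batch", batch)]:
    print(name, shape(value))
```

In this picture a single embedding is a rank-1 tensor, a sentence of token embeddings is rank 2, and a batch of sentences is rank 3.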

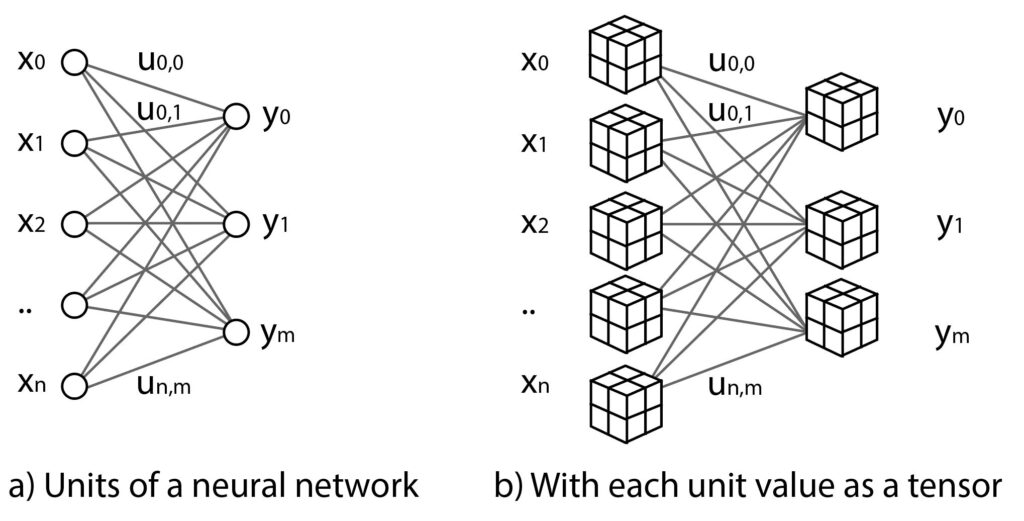

Let’s look, in a very simple way, at a neural network model with tensors as node values, meaning a value is calculated at every node, so we can understand why tensors are essential at every point in a feed-forward neural network. By replacing each unit component with a tensor, the network is able to express higher-dimensional data such as text in NLP, images, or videos. Of course, this is very simplistic compared with the layers in a GPT transformer.

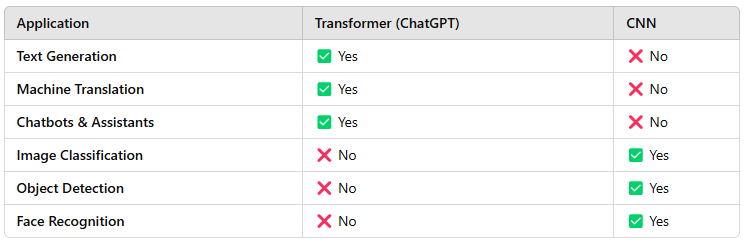

For a quick understanding, my focus is on transformer-based neural networks such as GPT, and not on CNNs (Convolutional Neural Networks) or RNNs (Recurrent Neural Networks). Transformers have the advantage of having no recurrent units, so they can process all tokens of a sequence in parallel and therefore require less training time than earlier recurrent architectures.

CNNs have been tried for NLP tasks, but they struggle with long-range dependencies in text. They work best for short patterns, like identifying simple word features, but they lack a global understanding of sentences like Transformers do.

- CNNs capture only local information, meaning they cannot model relationships between words far apart in a sentence.

- Transformers (like the GPT models behind ChatGPT) capture global dependencies through self-attention, which is crucial for language tasks.

Like the RNN-based sequence-to-sequence models before it, the original transformer model used an encoder-decoder architecture. The encoder consists of encoding layers that process all the input tokens together, one layer after another, while the decoder consists of decoding layers that iteratively process the encoder’s output and the decoder’s own output tokens.

The purpose of each encoder layer is to create contextualized representations of the tokens, where each representation corresponds to a token that “mixes” information from other input tokens via a self-attention mechanism. The attention layer is very important. In simple terms, attention scores different parts of an input based on their relevance to a given task, allowing the model to prioritize important information over irrelevant details.
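The "mixing" performed by self-attention can be sketched in a few lines. This is a deliberately simplified, single-head version under stated assumptions: queries, keys, and values are all the raw token vectors, whereas a real transformer first applies learned projection matrices and uses several heads. The function names and the toy vectors are illustrative only.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention with queries = keys = values = x."""
    dim = len(x[0])
    outputs, weights = [], []
    for query in x:
        # Relevance of every token (key) to the current token (query).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in x]
        row = softmax(scores)
        weights.append(row)
        # New representation: attention-weighted average of all token vectors.
        outputs.append([sum(w * value[j] for w, value in zip(row, x))
                        for j in range(dim)])
    return outputs, weights


tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]  # three toy 2-dimensional tokens
mixed, attn = self_attention(tokens)
for row in attn:
    print([round(w, 3) for w in row])
```

Each printed row shows how much one token attends to every token in the input. The first token gives more weight to the second token, which points in a similar direction, than to the third.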

Both the encoder and decoder layers have a feed-forward neural network for additional processing of their outputs and contain residual connections and layer normalization steps. These feed-forward layers contain most of the parameters in a Transformer model.

How Transformers Use Tensors

When you input text into a Transformer (like text-embedding-ada-002), it goes through these stages:

- Tokenization → Converts words/sentences into numerical tokens.

- Embedding Layer → Maps tokens to dense vectors (tensors).

- Transformer Layers (Self-Attention + Feedforward) → Process these tensors, applying matrix multiplications and non-linear transformations.

- Output Layer → Produces a final tensor representation (like an embedding or a prediction).
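The first two stages above, tokenization and the embedding layer, can be sketched as a lookup into a table. Everything here is a toy assumption: the vocabulary, the whitespace tokenizer, and the random 4-dimensional table are placeholders for what a real model learns during training, and the helper names are hypothetical.

```python
import random


def build_vocab(words):
    """Assign each distinct word an integer ID; ID 0 is reserved for unknown words."""
    vocab = {"<unk>": 0}
    for word in words:
        vocab.setdefault(word, len(vocab))
    return vocab


def tokenize(text, vocab):
    """Lowercase, split on whitespace, and map each word to its ID."""
    return [vocab.get(word, 0) for word in text.lower().split()]


vocab = build_vocab("threat detection relies on logs".split())

# Untrained placeholder values: one row of `dim` numbers per vocabulary entry.
rng = random.Random(0)
dim = 4
embedding_table = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in vocab]

token_ids = tokenize("Threat detection needs logs", vocab)
vectors = [embedding_table[i] for i in token_ids]  # embedding lookup

print(token_ids)                           # [1, 2, 0, 5]
print(len(vectors), "x", len(vectors[0]))  # 4 x 4
```

The word "needs" is not in the toy vocabulary, so it maps to the unknown ID 0. The resulting list of vectors is the tensor that the transformer layers would go on to process.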

Key Takeaways from more advanced embedding with transformer:

- Words are not embedded separately; they contribute to the meaning of the whole input.

- Context matters—the same word in different sentences may have different embeddings.

- For word-level analysis, you need to split text into separate words and embed each separately (not ideal with text-embedding-ada-002 since it’s optimized for sentence-level meaning).

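Once two texts are embedded, their cosine similarity can be calculated as the dot product of the two vectors divided by the product of their norms. The steps below walk through how two example sentences are turned into vectors that can be compared this way.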
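Written as a formula, with a small worked example, cosine similarity looks like this. The vectors a and b are made-up three-dimensional values chosen only to keep the arithmetic easy.

```latex
\cos(\theta) = \frac{\mathbf{a} \cdot \mathbf{b}}{\lVert \mathbf{a} \rVert \, \lVert \mathbf{b} \rVert}

% Worked example with a = (1, 2, 2) and b = (2, 1, 2):
\mathbf{a} \cdot \mathbf{b} = 1 \cdot 2 + 2 \cdot 1 + 2 \cdot 2 = 8, \qquad
\lVert \mathbf{a} \rVert = \sqrt{1 + 4 + 4} = 3, \qquad
\lVert \mathbf{b} \rVert = \sqrt{4 + 1 + 4} = 3

\cos(\theta) = \frac{8}{3 \cdot 3} = \frac{8}{9} \approx 0.889
```

A value of 1 means the vectors point in the same direction, 0 means they are orthogonal, and negative values mean they point in opposing directions.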

Step 1: Tokenization

We first tokenize both sentences into words/subwords:

- Text 1: "Cybersecurity log analysis is crucial for threat detection."

  - Tokens: ["Cybersecurity", "log", "analysis", "is", "crucial", "for", "threat", "detection"]

- Text 2: "Threat detection relies on analyzing security logs effectively."

  - Tokens: ["Threat", "detection", "relies", "on", "analyzing", "security", "logs", "effectively"]
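A word-level split like the one above can be reproduced with a short sketch. The regular expression and the `word_tokenize` name are assumptions chosen for illustration. Real transformer models typically use subword tokenizers, so their token lists and counts would differ.

```python
import re


def word_tokenize(text):
    """Split text into word tokens, dropping punctuation."""
    return re.findall(r"\w+", text)


text1 = "Cybersecurity log analysis is crucial for threat detection."
text2 = "Threat detection relies on analyzing security logs effectively."

tokens1 = word_tokenize(text1)
tokens2 = word_tokenize(text2)

print(len(tokens1), tokens1)
print(len(tokens2), tokens2)
```

Both sentences come out as eight word tokens, which is why each sentence matrix in the later steps has eight rows.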

Step 2: Embedding Each Word

Each token is converted into a dense vector representation (e.g., 300-dimensional if using Word2Vec, 768-dimensional if using BERT). This creates a word embedding matrix, where each row represents a word vector.

Step 3: Creating the Sentence Embedding Matrix

For a sentence, we stack the word embeddings together, forming a matrix of shape:

(Number of Tokens) × (Embedding Dimension)

Text 1 Matrix (8 × 1536)

Text 2 Matrix (8 × 1536)

If using transformers, we get contextual embeddings, meaning the vectors change based on the sentence context.

Step 4: Aggregating Sentence Embeddings

To represent the entire sentence as a single vector, common methods include:

- Averaging all word vectors → Mean pooling.

- Taking the vector of the [CLS] token (BERT-style models).

- Using an attention mechanism to create a weighted representation.

After applying mean pooling, both sentences will be represented by one 1536-dimensional vector each, making it easy to compare them using cosine similarity.
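Mean pooling followed by a comparison can be sketched with toy numbers. The 3-dimensional token vectors below are invented for readability and stand in for the 1536-dimensional ones, and the helper names are hypothetical.

```python
import math


def mean_pool(token_vectors):
    """Average token vectors column by column into one sentence vector."""
    count = len(token_vectors)
    return [sum(column) / count for column in zip(*token_vectors)]


def cosine_similarity(a, b):
    """Dot product of a and b divided by the product of their norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy matrices: rows are tokens, columns are embedding dimensions.
sentence_a = [[1.0, 0.0, 2.0], [3.0, 2.0, 0.0]]                   # 2 tokens
sentence_b = [[0.0, 2.0, 1.0], [1.0, 2.0, 1.0], [2.0, 2.0, 1.0]]  # 3 tokens

pooled_a = mean_pool(sentence_a)  # [2.0, 1.0, 1.0]
pooled_b = mean_pool(sentence_b)  # [1.0, 2.0, 1.0]

print(pooled_a, pooled_b)
print(f"{cosine_similarity(pooled_a, pooled_b):.4f}")  # 0.8333
```

Pooling gives both sentences a vector of the same length even though they have different numbers of tokens, which is what makes the cosine comparison possible.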

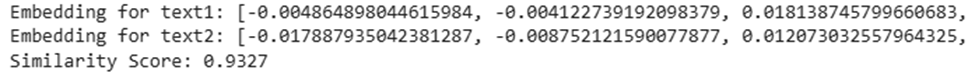

Here is simple Python code, generated by ChatGPT, that shows the above in action: it generates embeddings and calculates the cosine similarity of two sentences, text1 = “Cybersecurity log analysis is crucial for threat detection.” and text2 = “Threat detection relies on analyzing security logs effectively.”

```python
import openai
from numpy import dot
from numpy.linalg import norm

# Set your OpenAI API key
OPENAI_API_KEY = "Your API ChatGPT Key"

# Initialize OpenAI client (api_key must be passed as a keyword argument)
client = openai.OpenAI(api_key=OPENAI_API_KEY)


# Function to get embeddings
def get_embedding(text, model="text-embedding-ada-002"):
    response = client.embeddings.create(
        input=text,
        model=model
    )
    return response.data[0].embedding  # Returns a list of float values


# Cosine similarity: dot product divided by the product of the vector norms
def cosine_similarity(vec1, vec2):
    return dot(vec1, vec2) / (norm(vec1) * norm(vec2))


# Example texts
text1 = "Cybersecurity log analysis is crucial for threat detection."
text2 = "Threat detection relies on analyzing security logs effectively."

embedding1 = get_embedding(text1)
embedding2 = get_embedding(text2)

print(f"Embedding for text1: {embedding1[:100]}...")  # Print first 100 values
print(f"Embedding for text2: {embedding2[:100]}...")

similarity_score = cosine_similarity(embedding1, embedding2)
print(f"Similarity Score: {similarity_score:.4f}")
```

Running the code above in Google Colab prints the first values of both embeddings followed by their cosine similarity score.

As you can see, the two texts “Cybersecurity log analysis is crucial for threat detection.” and “Threat detection relies on analyzing security logs effectively.” are similar but not identical. A high cosine similarity tells an LLM-based system that the texts are close in meaning, which helps it decide whether one is relevant context for a prompt about the other.